GitHub - glonlas/Tensorflow-Intel-Atom-CPU: Tensorflow compiled for an Intel(R) Atom(TM) CPU C2338 @ 1.74GHz (Silvermont) on Ubuntu 18.04

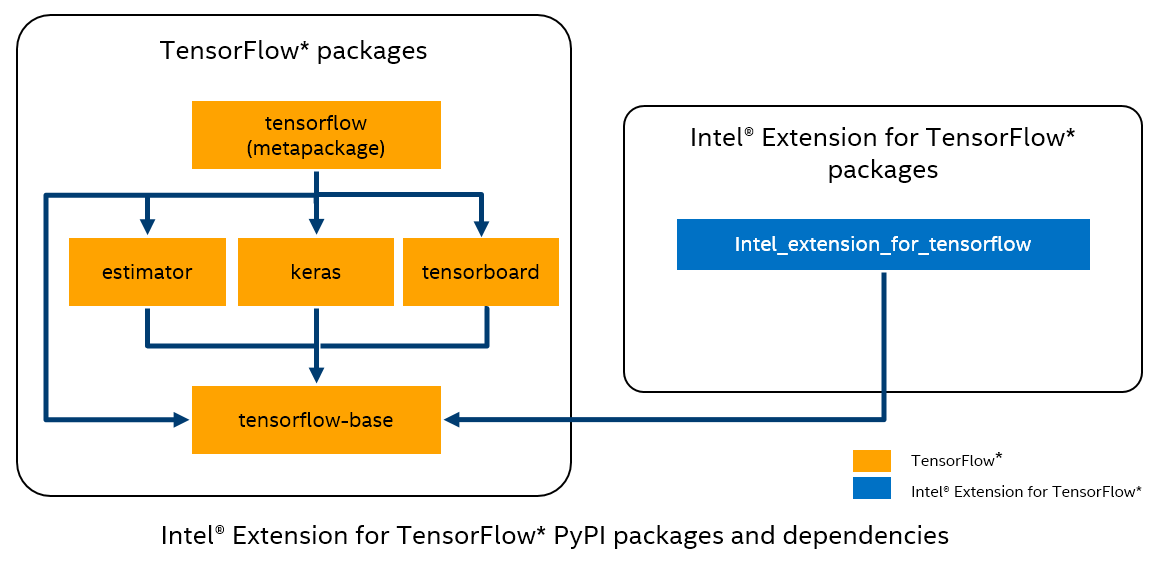

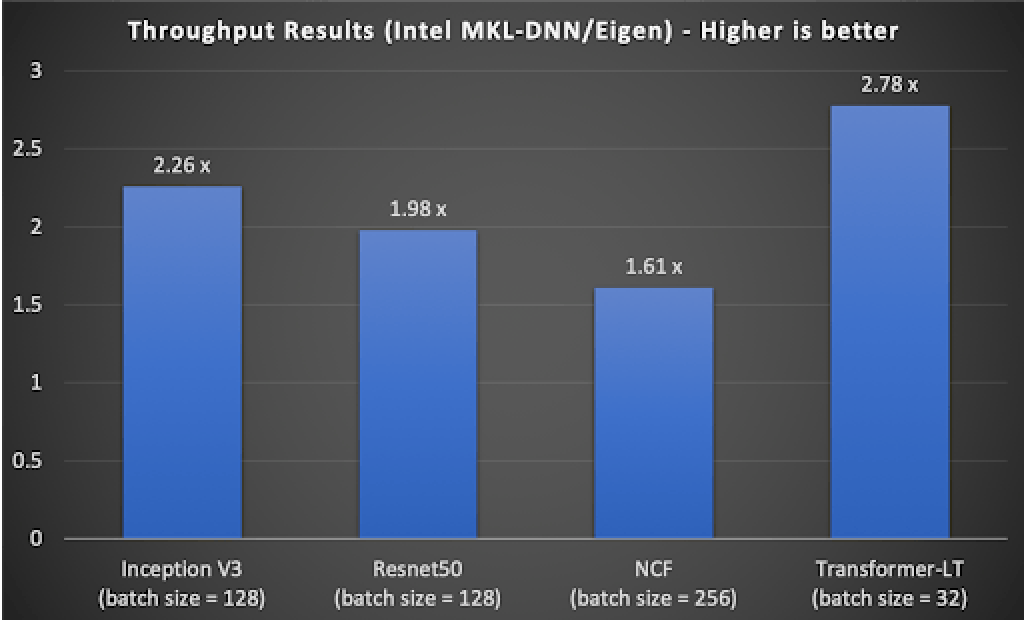

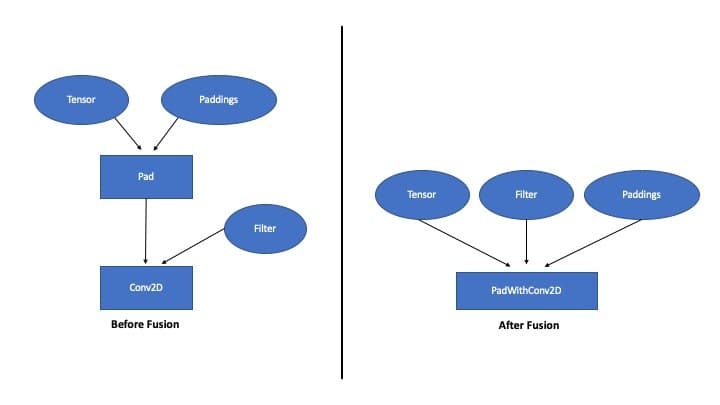

Leverage Intel Deep Learning Optimizations in TensorFlow | by Intel Tech | Intel Analytics Software | Medium

Accelerating AI performance on 3rd Gen Intel® Xeon® Scalable processors with TensorFlow and Bfloat16 — The TensorFlow Blog

You can now run Machine learning with Tensorflow with Intel iGPU on Windows and WSL if your Intel iGPU driver has DirectML support. : r/intel